This post explains the idea of LLM Council (Local & Free) in plain English.

Download: https://github.com/MrSchmaltz/llmcouncil/

No buzzwords.

No hype.

No sales pitch.

Just a clear explanation of what it is, why someone might want it, and how it works.

You do not need to be an AI expert to understand this. If you can use a chat app and read basic instructions, you can follow along.

A Simple Problem to Start With

Most people use AI like this:

You open one app.

You ask one question.

You get one answer.

That answer might be good.

It might be wrong.

It might miss something important.

You usually do not know which.

Some people solve this by asking the same question again and again.

Others copy the question into different AI tools and compare answers by hand.

This works, but it is slow and messy.

The LLM Council idea exists to make this easier.

What “LLM Council” Means (In Simple Terms)

An LLM is a large language model.

That is just a fancy name for a text-based AI that answers questions.

A council is a group that discusses something together.

So an LLM Council means:

A group of AI models that all answer the same question, look at each other’s answers, and help produce one final reply.

Think of it like this:

Instead of asking one person for advice, you ask several people.

Then you let them review each other’s advice.

Finally, one person writes a summary using the best parts.

That is all this project does.

What “Local & Free” Means Here

This version of LLM Council focuses on two things:

Local

The system can work with AI models running on your own computer or local network.

Free

You are not locked into paid services. The design allows you to use models that do not require subscriptions.

This is not about replacing big companies.

It is about having control and flexibility.

What This Project Is (And Is Not)

Let’s be honest and clear.

This project is:

- A test of an idea

- A learning tool

- A way to compare AI answers more easily

- A simple web app that looks like a chat tool

This project is not:

- A finished product

- A perfect answer machine

- A fast or optimized system

- A secure enterprise solution

It is meant for experimentation, not perfection.

Why Ask More Than One AI?

Different AI models are good at different things.

One might:

- Explain things clearly

- Be careful and accurate

- Give creative ideas

- Miss obvious details

Another might:

- Be brief but correct

- Catch mistakes

- Focus on practical steps

- Ignore edge cases

When you only ask one model, you only see one point of view.

When you ask many, patterns appear:

- Common agreement

- Clear mistakes

- Strong explanations

- Weak reasoning

LLM Council makes this visible.

The Basic Flow (Big Picture)

Here is the full process, without technical details.

- You ask one question.

- Many AI models answer it separately.

- Each model reads the others’ answers.

- They rank the answers.

- One final answer is written.

That’s it.

No tricks.

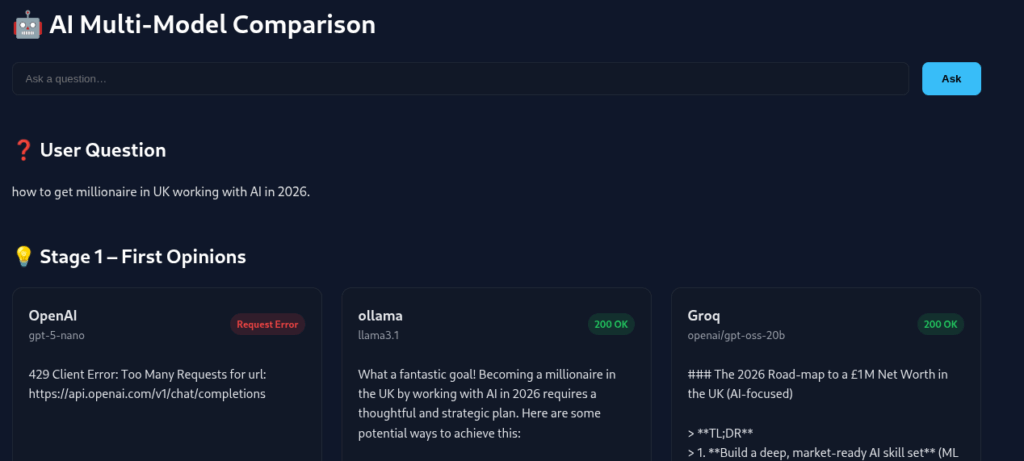
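For readers who like to see the shape in code, the flow above can be sketched as three stages run in order. This is a minimal sketch, not the project's actual code: `CouncilResult`, `run_council`, and the three `fake_*` functions are hypothetical names invented for this example, and the fake functions stand in for real models so the sketch runs on its own.

```python
from dataclasses import dataclass
from typing import Callable

# All names here are hypothetical; the real project may be organized differently.
Answers = dict[str, str]           # model name -> answer text
Rankings = dict[str, list[str]]    # reviewer name -> model names, best first


@dataclass
class CouncilResult:
    question: str
    answers: Answers
    rankings: Rankings
    final_answer: str


def run_council(
    question: str,
    collect: Callable[[str], Answers],
    review: Callable[[str, Answers], Rankings],
    summarize: Callable[[str, Answers, Rankings], str],
) -> CouncilResult:
    """Run the stages in order and keep every intermediate result."""
    answers = collect(question)                     # models answer separately
    rankings = review(question, answers)            # models rank the answers
    final = summarize(question, answers, rankings)  # one final answer is written
    return CouncilResult(question, answers, rankings, final)


# Toy stand-ins so the sketch runs without any real model.
def fake_collect(question):
    return {"model_a": "Plants make food from light.", "model_b": "It is how plants eat."}


def fake_review(question, answers):
    # Every reviewer returns the same alphabetical order, just for the demo.
    return {name: sorted(answers) for name in answers}


def fake_summarize(question, answers, rankings):
    # Pick the answer the first reviewer ranked highest.
    best = next(iter(rankings.values()))[0]
    return answers[best]


result = run_council("What is photosynthesis?", fake_collect, fake_review, fake_summarize)
print(result.final_answer)
```

Keeping the answers and rankings in the result, not just the final text, is what lets a user look at every stage afterwards.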

Step 1: You Ask a Question

You type a question into a simple web page.

It looks like a normal chat box.

You do not need to format anything.

You do not need to select models manually (unless you want to).

You just ask.

Example:

“Explain what photosynthesis is in simple terms.”

Step 2: Each Model Answers Alone

Your question is sent to multiple AI models.

Important detail:

They do not see each other’s answers yet.

Each model:

- Reads the question

- Produces its own reply

- Works independently

This keeps the models from copying or being swayed by each other at this stage.
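One way to picture this step is a small fan-out: the same question goes to every model, and each call only receives the question. The sketch below assumes each model is just a Python function that takes a question and returns text. `tiny_model`, `careful_model`, and `ask_all` are made-up names for illustration, and running the calls in threads is a choice made here, not something the project is known to do.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-ins for real model calls: question in, text out.
def tiny_model(question: str) -> str:
    return f"Short answer to: {question}"


def careful_model(question: str) -> str:
    return f"Detailed answer to: {question}"


MODELS = {"tiny": tiny_model, "careful": careful_model}


def ask_all(question: str, models=MODELS) -> dict[str, str]:
    """Send the same question to every model; no model sees another's reply."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        # Each call gets only the question, so the answers stay independent.
        futures = {name: pool.submit(fn, question) for name, fn in models.items()}
        return {name: future.result() for name, future in futures.items()}


answers = ask_all("Explain what photosynthesis is in simple terms.")
for name, text in answers.items():
    print(f"[{name}] {text}")
```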

Step 3: You Can See Every Answer

All answers are collected and shown to you.

They are displayed in a tab view.

This means:

- One tab per model

- Click to switch between them

- Read each answer separately

You can stop here if you want.

Even without the next steps, this alone is useful.

You can:

- Compare explanations

- Spot differences

- Decide which answer you trust

Step 4: The Models Review Each Other

Now comes the interesting part.

Each AI model is shown the other answers.

But there is a rule:

The model names are hidden.

This means:

- No favoritism

- No “I know this model”

- Just judging the text itself

Each model is asked to rank the answers based on:

- Accuracy

- Insight

- Clarity

They do not rewrite anything yet.

They only review and rank.

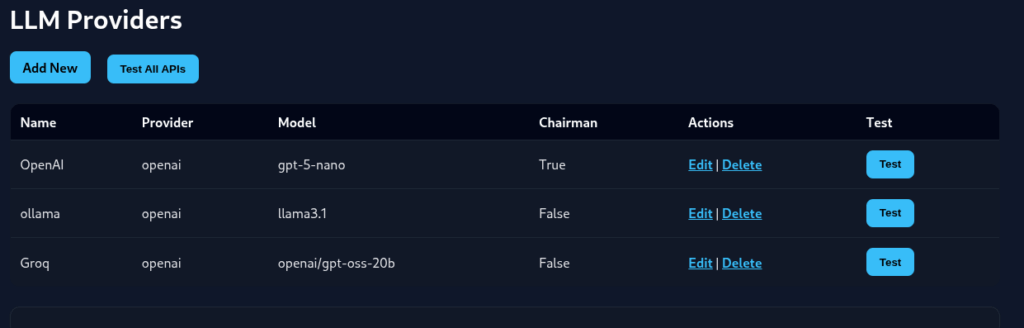
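The review step can be sketched in three small pieces: hide the model names behind neutral labels, build the review prompt, and summarize the rankings that come back. Everything named here (`anonymize`, `build_review_prompt`, `average_rank`, the "Response A" labels) is an assumption made for illustration. Averaging the rank positions in particular is just one simple way to combine rankings, not necessarily what the project does.

```python
import random
from typing import Optional


def anonymize(answers: dict[str, str], seed: Optional[int] = None):
    """Replace model names with neutral labels, in shuffled order."""
    names = list(answers)
    random.Random(seed).shuffle(names)
    # Labels run A, B, C, ... so this sketch supports up to 26 models.
    label_to_model = {f"Response {chr(65 + i)}": name for i, name in enumerate(names)}
    labelled = {label: answers[model] for label, model in label_to_model.items()}
    return labelled, label_to_model


def build_review_prompt(question: str, labelled: dict[str, str]) -> str:
    """Ask a reviewer to rank the labelled answers without rewriting them."""
    body = "\n\n".join(f"{label}:\n{text}" for label, text in labelled.items())
    return (
        f"Question: {question}\n\n{body}\n\n"
        "Rank these responses from best to worst, judging accuracy, "
        "insight, and clarity. Do not rewrite them."
    )


def average_rank(rankings: list[list[str]]) -> dict[str, float]:
    """Average position of each label across reviewers (1 = best)."""
    positions: dict[str, list[int]] = {}
    for ranking in rankings:
        for position, label in enumerate(ranking, start=1):
            positions.setdefault(label, []).append(position)
    return {label: sum(p) / len(p) for label, p in positions.items()}


answers = {"tiny": "Plants eat light.", "careful": "Plants convert light into sugar."}
labelled, label_to_model = anonymize(answers, seed=0)
prompt = build_review_prompt("What is photosynthesis?", labelled)

# Pretend three reviewers sent back these rankings (best first).
scores = average_rank([
    ["Response B", "Response A"],
    ["Response B", "Response A"],
    ["Response A", "Response B"],
])
print(scores)
```

The mapping from label back to model name stays on the app's side, so the real names can be restored when the results are shown.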

Step 5: One Model Becomes the “Chairman”

One model is chosen as the Chairman.

This does not mean it is smarter.

It just has a different role.

The Chairman:

- Reads all answers

- Reads the rankings

- Combines the best parts

- Writes one final reply

This final reply is what most users will read.
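In code, the Chairman's role can be as small as one extra prompt. The sketch below assumes the Chairman is any function that takes a prompt and returns text. The prompt wording and the names `build_chairman_prompt`, `chairman_reply`, and `fake_chairman` are invented for this example.

```python
from typing import Callable


def build_chairman_prompt(
    question: str,
    answers: dict[str, str],
    rankings: dict[str, list[str]],
) -> str:
    """Put the question, every answer, and every ranking into one prompt."""
    answer_text = "\n\n".join(f"{label}:\n{text}" for label, text in answers.items())
    ranking_text = "\n".join(
        f"{reviewer}: {' > '.join(order)}" for reviewer, order in rankings.items()
    )
    return (
        f"Question: {question}\n\n"
        f"Answers:\n{answer_text}\n\n"
        f"Rankings (best first):\n{ranking_text}\n\n"
        "Write one final reply that merges the strongest parts, "
        "removes repetition, and fixes mistakes."
    )


def chairman_reply(question, answers, rankings, chairman: Callable[[str], str]) -> str:
    """Hand the combined prompt to whichever model plays the Chairman."""
    return chairman(build_chairman_prompt(question, answers, rankings))


# Stand-in Chairman so the sketch runs without a real model.
def fake_chairman(prompt: str) -> str:
    return f"(final reply based on a {len(prompt)}-character prompt)"


answers = {"Response A": "Plants eat light.", "Response B": "Plants turn light into sugar."}
rankings = {"Reviewer 1": ["Response B", "Response A"]}
prompt = build_chairman_prompt("What is photosynthesis?", answers, rankings)
final = chairman_reply("What is photosynthesis?", answers, rankings, fake_chairman)
print(final)
```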

Why Use a Chairman at All?

Without a final step, you would still need to decide for yourself which answer to use.

The Chairman’s job is to:

- Remove repetition

- Fix mistakes

- Merge strong explanations

- Produce a clean answer

Think of it as an editor, not a boss.

What You See as a User

From the user’s point of view, the experience is simple.

You see:

- Your question

- Tabs with individual answers

- A final combined answer

You are not forced to trust the final answer.

You can always:

- Read the raw responses

- Compare them yourself

- Ignore the Chairman if you want

Nothing is hidden from you.

Why This Is Useful for Learning

This system is especially helpful if you are:

- Studying something new

- Unsure which answer to trust

- Curious about how AI “thinks”

- Testing prompts or questions

Seeing multiple answers side by side teaches you more than a single polished reply does.

You see:

- Different wording

- Different focus

- Different mistakes

That is valuable.

Local Models: Why They Matter

Running models locally gives you:

- More control

- No dependency on one provider

- The ability to experiment freely

Local models are not always as strong as large hosted ones.

That is okay.

The point here is not perfection.

The point is comparison and control.

The /apis Folder Explained Simply

All models are connected through small pieces of code called adapters.

These live in the /apis folder.

Each adapter does three simple things:

- Sends the question to a model

- Receives the answer

- Formats it in a standard way

That is all.

This design makes the system flexible.
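As a rough illustration, an adapter could look like the sketch below. It is not copied from the project's /apis folder: `Adapter`, `ModelReply`, and `EchoAdapter` are hypothetical names, and the "model" here is faked so the example runs by itself.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class ModelReply:
    """The standard shape every adapter returns (an assumed format)."""
    model: str
    text: str


class Adapter(Protocol):
    """What the rest of the app expects from any adapter."""
    name: str

    def ask(self, question: str) -> ModelReply: ...


class EchoAdapter:
    """A toy adapter; a real one would call a local or remote model here."""
    name = "echo"

    def ask(self, question: str) -> ModelReply:
        # 1. Send the question (faked: no network call is made).
        # 2. Receive the answer in whatever shape the model uses.
        raw = {"output": f"  You asked: {question}\n"}
        # 3. Format it in the standard way.
        return ModelReply(model=self.name, text=raw["output"].strip())


reply = EchoAdapter().ask("What is photosynthesis?")
print(reply)
```

Because every adapter returns the same `ModelReply` shape, the code that shows tabs or builds review prompts never needs to know which model produced the text.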

Adding a New Model (Conceptually)

You do not need to change the whole app.

You just:

- Create a new file in /apis

- Follow the same structure as others

- Register it

The rest of the system does not care where the model comes from.

This keeps things simple and clean.
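What "register it" might look like depends on the project, so treat the following as one possible pattern, not the project's real mechanism. The sketch assumes a simple dictionary of models and a hypothetical `register` decorator; `REGISTRY` and `shouty_model` are made-up names.

```python
from typing import Callable

# Hypothetical registry: model name -> function that answers a question.
REGISTRY: dict[str, Callable[[str], str]] = {}


def register(name: str):
    """Decorator that adds a model function to the registry under a name."""
    def decorator(fn: Callable[[str], str]) -> Callable[[str], str]:
        REGISTRY[name] = fn
        return fn
    return decorator


# "Adding a new model" is then one new function with one decorator line.
@register("shouty")
def shouty_model(question: str) -> str:
    return question.upper()


# The rest of the app only looks at the registry, not at where models come from.
for name, model in REGISTRY.items():
    print(name, "->", model("hello council"))
```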

No Lock-In by Design

This project does not force you to use:

- One company

- One service

- One type of model

You can mix:

- Local models

- Remote models

- Experimental setups

If a model stops working, you remove it.

Nothing breaks.
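A small sketch of what "nothing breaks" could mean in practice: if one model raises an error, the others still answer. This goes one step further than the text, which only says you remove a broken model by hand. Skipping failures automatically is an assumption of this example, and `ask_available` and both toy models are hypothetical names.

```python
def ask_available(question: str, models: dict) -> tuple[dict[str, str], list[str]]:
    """Ask every model; collect answers and note which models failed."""
    answers: dict[str, str] = {}
    failed: list[str] = []
    for name, fn in models.items():
        try:
            answers[name] = fn(question)
        except Exception:
            # A broken model is skipped so the rest of the council can continue.
            failed.append(name)
    return answers, failed


def good_model(question: str) -> str:
    return f"ok: {question}"


def broken_model(question: str) -> str:
    raise ConnectionError("model is offline")


answers, failed = ask_available("hi", {"good": good_model, "broken": broken_model})
print(answers)
print(failed)
```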

Why This Is Not About “Best AI”

This project is not trying to answer:

“Which AI is the best?”

That question changes all the time.

Instead, it asks:

“How can we compare answers more fairly?”

And:

“How can we reduce blind trust in a single output?”

The council approach encourages critical reading, not blind belief.

Limits You Should Be Aware Of

This system has limits.

Some examples:

- If all models are wrong, the final answer will still be wrong

- If models share the same bias, it will not disappear

- It is slower than asking one model

- It is more complex than a simple chat app

These are trade-offs, not bugs.

Why This Is Still Worth Exploring

Even with limits, this approach offers something useful.

It:

- Encourages transparency

- Shows uncertainty

- Makes AI behavior visible

- Helps users think, not just consume

That alone makes it worth testing.

Inspiration and Credit

This idea was inspired by the GenAI LLM Council project by Ines756.

That project showed how:

- Multiple models can work together

- Review stages can improve output

- A council structure can be built

This version adapts the idea to:

- Focus on local use

- Avoid paid dependencies

- Keep things simple and open

Who This Project Is For

This is for people who:

- Like to experiment

- Want to learn how AI answers differ

- Care about control and flexibility

- Enjoy building and testing ideas

It is not aimed at:

- Enterprise deployment

- High-stakes decisions

- People who want one-click perfection

Final Thoughts

LLM Council (Local & Free) is not magic.

It does not make AI smarter.

What it does is:

- Slow things down

- Show more context

- Let you compare before trusting

In a world where AI answers are often taken at face value, that is a useful change.

If nothing else, it reminds us of a simple truth:

One answer is never the whole picture.

And sometimes, asking a small council is better than asking a single voice.
